This page is outdated. For recent results, check my publications page or the ArthroNav Project website.

Please check the ArthroNav Project for recent results in Computer Assisted Surgery.

This research topic follows from a recent collaboration with the Orthopedic Service of "Hospitais da Universidade de Coimbra". Minimally invasive reconstruction of the Anterior Cruciate Ligament (ACL) is a difficult, error-prone procedure that requires highly trained surgeons. Our goal is to process the endoscopic video in order to improve the practitioner's perception and navigation skills inside the knee joint. I am interested in several aspects of this problem, such as sensor and illumination modeling, partial 3D reconstruction, and registration of pre-operative 3D models.

I am interested in the problem of robustly reconstructing dynamic scenes from a set of views acquired by a calibrated camera network. A robust solution to this problem would enable a wide range of applications, such as 3D video and tele-immersion. I am interested in the following topics: wide-area camera network calibration, scalable algorithms for multi-view reconstruction, reconstruction beyond the Lambertian assumption, and acceleration of algorithms using GPGPU.
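As an illustration of the most basic step in reconstruction from a calibrated network, the textbook linear (DLT) triangulation recovers a 3D point from its projections in several calibrated cameras. This is a minimal sketch with a hypothetical function name, assuming known 3x4 projection matrices and noise-free, already-matched image points; it is not a description of a specific reconstruction pipeline.

```python
import numpy as np

def triangulate_dlt(projections, points):
    """Linear triangulation of one 3D point seen by several calibrated cameras.

    projections: list of 3x4 projection matrices P_k = K_k [R_k | t_k]
    points: list of matching image coordinates (u_k, v_k), one per camera
    Returns the inhomogeneous 3D point that minimizes the algebraic error.
    """
    rows = []
    for P, (u, v) in zip(projections, points):
        # From x ~ P X: u * (p3 . X) - (p1 . X) = 0 and v * (p3 . X) - (p2 . X) = 0
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.asarray(rows)
    # Homogeneous least squares: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

Each camera adds two rows to the 2N x 4 system, so the cost per point grows linearly with the number of views that observe it.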

EasyCal Toolbox: The movies show results obtained with the EasyCal toolbox when calibrating a synchronous wide-area camera network with 52 nodes/cameras. The software uses image correspondences obtained by randomly moving a laser in the dark. The EasyCal toolbox has the unique feature of estimating the lens radial distortion simultaneously with the intrinsic and extrinsic parameters, without requiring nonlinear optimization. The first version of the software was developed in Matlab and calibrated this large network (52 cameras) in less than 3 minutes on a conventional PC. The top movie shows the camera network (GRASP Lab, University of Pennsylvania) and the estimated camera layout. The bottom movie shows a multi-view reconstruction of the room using the EasyCal calibration results (reconstruction courtesy of Xenophon Zabulis). I expect a second version of the EasyCal toolbox to be available soon.
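One simple way to turn the laser sequence into correspondences is to locate the bright spot in each synchronized frame. The sketch below assumes a grayscale frame, a fixed intensity threshold, and a single spot per frame; it uses an intensity-weighted centroid and is not necessarily the detector used in EasyCal (the function name is hypothetical).

```python
import numpy as np

def laser_spot_centroid(image, threshold):
    """Sub-pixel location of a bright laser spot in a dark grayscale frame.

    Returns (u, v) as the intensity-weighted centroid of the pixels above
    `threshold`, or None when no pixel exceeds it (spot not visible).
    """
    img = np.asarray(image, dtype=float)
    mask = img > threshold
    if not mask.any():
        return None
    v, u = np.nonzero(mask)          # row index is v, column index is u
    w = img[v, u] - threshold        # weights: intensity above the threshold
    return float(np.sum(w * u) / np.sum(w)), float(np.sum(w * v) / np.sum(w))
```

Because the network is synchronous, the spots detected at the same time instant in different cameras form one multi-view correspondence; cameras that return None for that instant are simply left out of it.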

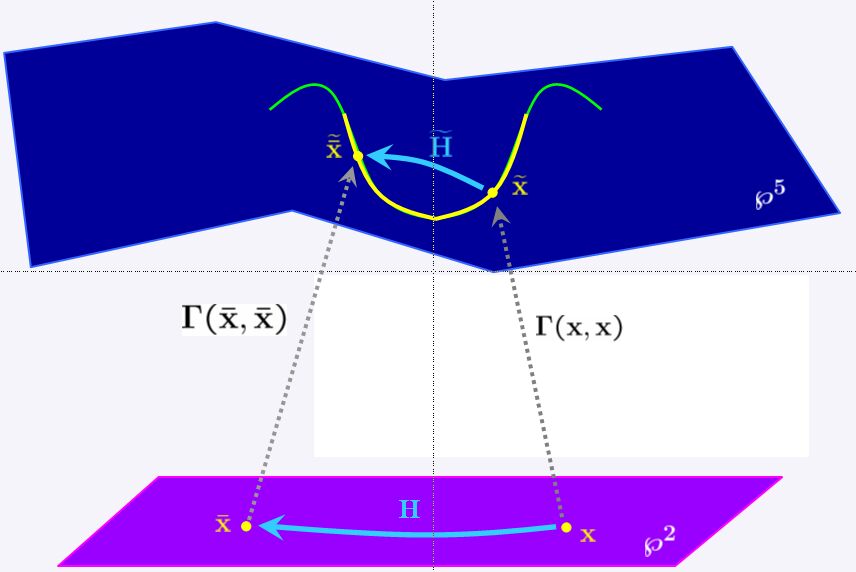

The conventional perspective camera is an example of a central projection system, where every light ray goes through a single 3D point (the projection center). Other central projection systems (e.g. catadioptric sensors and cameras with fish-eye lenses) do not follow the pinhole model. The images acquired by these sensors present nonlinear distortions that preclude the direct application of standard algorithms and techniques. I am trying to cope with these nonlinearities by using lifted representations. The idea is to embed the projective plane in a higher-dimensional space in order to better understand the geometric relations between views (e.g. the epipolar geometry).
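For concreteness, one common choice of lifting is the second-order Veronese map, which sends a homogeneous point of the projective plane to its six quadratic monomials (the ordering below is an assumption). Its usefulness is that a conic, quadratic in the original coordinates, becomes linear in the lifted ones, and a 6x6 lifted fundamental matrix then expresses the epipolar constraint as a bilinear form in lifted points:

```latex
\Gamma(\mathbf{x}) = (x^2,\; xy,\; y^2,\; xz,\; yz,\; z^2)^{\top},
\qquad \mathbf{x} = (x, y, z)^{\top} \in \mathbb{P}^2

\mathbf{x}^{\top}
\begin{pmatrix} a & b/2 & d/2 \\ b/2 & c & e/2 \\ d/2 & e/2 & f \end{pmatrix}
\mathbf{x} = 0
\iff
\hat{\mathbf{c}}^{\top}\,\Gamma(\mathbf{x}) = 0,
\qquad \hat{\mathbf{c}} = (a, b, c, d, e, f)^{\top}

\Gamma(\mathbf{x}')^{\top}\,\hat{F}\,\Gamma(\mathbf{x}) = 0,
\qquad \hat{F} \in \mathbb{R}^{6 \times 6}
```

Each correspondence then gives one equation that is linear in the 36 entries of the lifted fundamental matrix, which is what makes a linear estimate possible.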

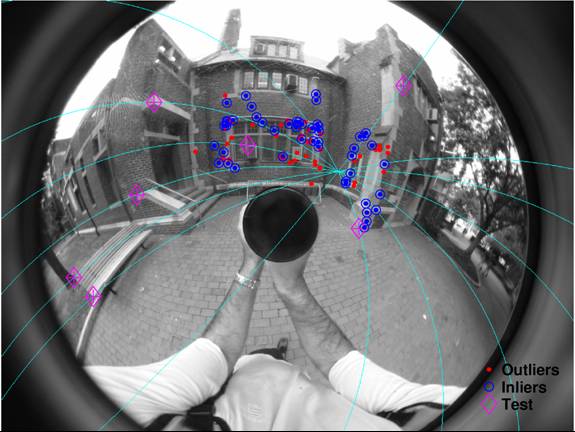

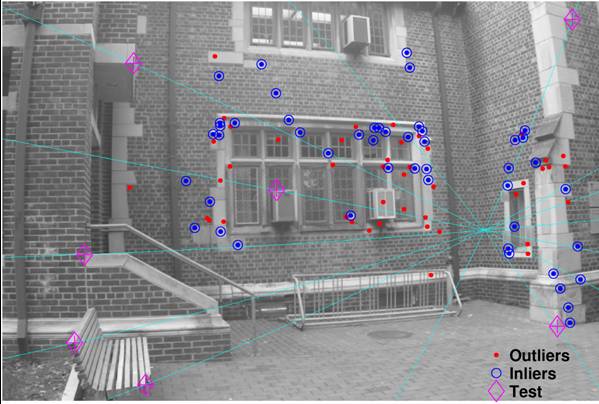

Below I show results of estimating a lifted fundamental matrix between a view acquired by a central paracatadioptric sensor and a view from a camera with significant lens distortion. The camera calibration is unknown, and the only data are correspondences obtained by matching SIFT features.

From the lifted fundamental matrix it is possible to extract the distortion parameters and correct the image.
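The two steps above can be sketched under simplifying assumptions. The code below (Python with NumPy; all function names are hypothetical) estimates the lifted fundamental matrix linearly from lifted correspondences with the SVD, using the monomial ordering (x², xy, y², xz, yz, z²), and then corrects points with the one-parameter division model. It assumes noise-free correspondences and that the distortion coefficient and centre have already been recovered; extracting them from the lifted matrix depends on the specific sensor pair and is not shown. The division model is an assumed distortion model, not necessarily the one used in this work.

```python
import numpy as np

def lift(p):
    """Second-order (Veronese) lifting of N homogeneous 2D points (N x 3) to N x 6.

    The monomial ordering (x^2, xy, y^2, xz, yz, z^2) is an assumption.
    """
    x, y, z = p[:, 0], p[:, 1], p[:, 2]
    return np.stack([x * x, x * y, y * y, x * z, y * z, z * z], axis=1)

def estimate_lifted_fundamental(p1, p2):
    """Linear estimate of a 6x6 F_hat with lift(p2)^T F_hat lift(p1) = 0.

    p1, p2: N x 3 homogeneous points in view 1 and view 2, N >= 35.
    No data normalization and no outlier rejection: noise-free sketch only.
    """
    l1, l2 = lift(p1), lift(p2)
    # One row per correspondence: the outer product of lifted points, flattened
    # row-major so that row . vec(F_hat) = lift(p2)^T F_hat lift(p1).
    A = np.einsum("ni,nj->nij", l2, l1).reshape(len(p1), 36)
    # Least-squares null vector: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    F_hat = vt[-1].reshape(6, 6)
    return F_hat / np.linalg.norm(F_hat)

def undistort_division(pts, xi, center):
    """Correct radial distortion with the one-parameter division model
    x_u = c + (x_d - c) / (1 + xi * r_d^2), where xi < 0 for barrel distortion."""
    c = np.asarray(center, dtype=float)
    d = np.asarray(pts, dtype=float) - c
    r2 = np.sum(d * d, axis=1, keepdims=True)
    return c + d / (1.0 + xi * r2)
```

With 36 unknowns defined up to scale, at least 35 correspondences in general position are needed. Real SIFT matches contain outliers, so on real images the linear step would normally be wrapped in a robust scheme such as RANSAC, with the lifted coordinates normalized first.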

I am interested in vision sensors that enable real-time acquisition of panoramic images. I work with central catadioptric sensors, which are vision systems that combine mirrors and lenses (dioptrics) to achieve enhanced fields of view while keeping a single effective viewpoint. My research focuses on geometric modeling of the image formation, auto-calibration, development of efficient image processing algorithms, and visual servoing. As a result of this work, we developed a calibration toolbox for paracatadioptric cameras that uses line images. The accompanying example shows, on the right, a video generated by perspective rectification of the omnidirectional image on the left.
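The perspective rectification mentioned above can be sketched with the unified sphere model for central catadioptric sensors, in which ξ = 1 corresponds to the paracatadioptric case. This is a minimal sketch, assuming the combined scale f and principal point (cx, cy) are known from calibration and that the mirror axis is the sensor z-axis; the function names are hypothetical. For every pixel of a virtual perspective camera it computes where to sample the omnidirectional image.

```python
import numpy as np

def para_project(rays, f, cx, cy, xi=1.0):
    """Unified sphere model: project 3D ray directions into the omnidirectional image.

    xi = 1 corresponds to a paracatadioptric sensor (parabolic mirror + orthographic lens).
    """
    s = rays / np.linalg.norm(rays, axis=-1, keepdims=True)  # points on the unit sphere
    denom = s[..., 2] + xi
    return f * s[..., 0] / denom + cx, f * s[..., 1] / denom + cy

def rectification_map(width, height, f_virt, R, f, cx, cy):
    """For each pixel of a virtual perspective view with rotation R and focal
    length f_virt, return the omnidirectional image coordinates to sample."""
    u, v = np.meshgrid(np.arange(width), np.arange(height))
    rays = np.stack([(u - (width - 1) / 2) / f_virt,
                     (v - (height - 1) / 2) / f_virt,
                     np.ones_like(u, dtype=float)], axis=-1)
    rays = rays @ R.T  # rotate virtual-camera rays into the sensor frame
    return para_project(rays, f, cx, cy)
```

The two returned arrays, converted to float32, can be passed as the x and y maps to OpenCV's `cv2.remap` to produce the rectified frame; changing R pans the virtual camera, so a perspective video can be generated from a single omnidirectional sequence.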

I worked for several years on active vision platforms. This is a multidisciplinary field in which I acquired skills and experience in topics such as hardware and system design, control theory, and real-time computer vision. On the right is the MVS system for multi-target tracking, and on the left is binocular tracking using the MDOF robot head.